The Neocloud AI Engine of the future

Boost AI performance and cut costs with scalable, fully managed infrastructure powered by AMD GPUs and custom AI silicon.

Non-optimized reasoning inference

Reasoning on Awesome Neocloud Engine

The future of AI Infrastructure

Create the next wave of AI use cases powered by heterogeneous hardware.

Uncharted AI performance and an unstoppable developer ecosystem for emerging AI accelerators.

Optimized Inference

Lower AI TCO and deliver peak performance for end users.

Model Fine-Tuning

Build with open source models for custom use cases at scale.

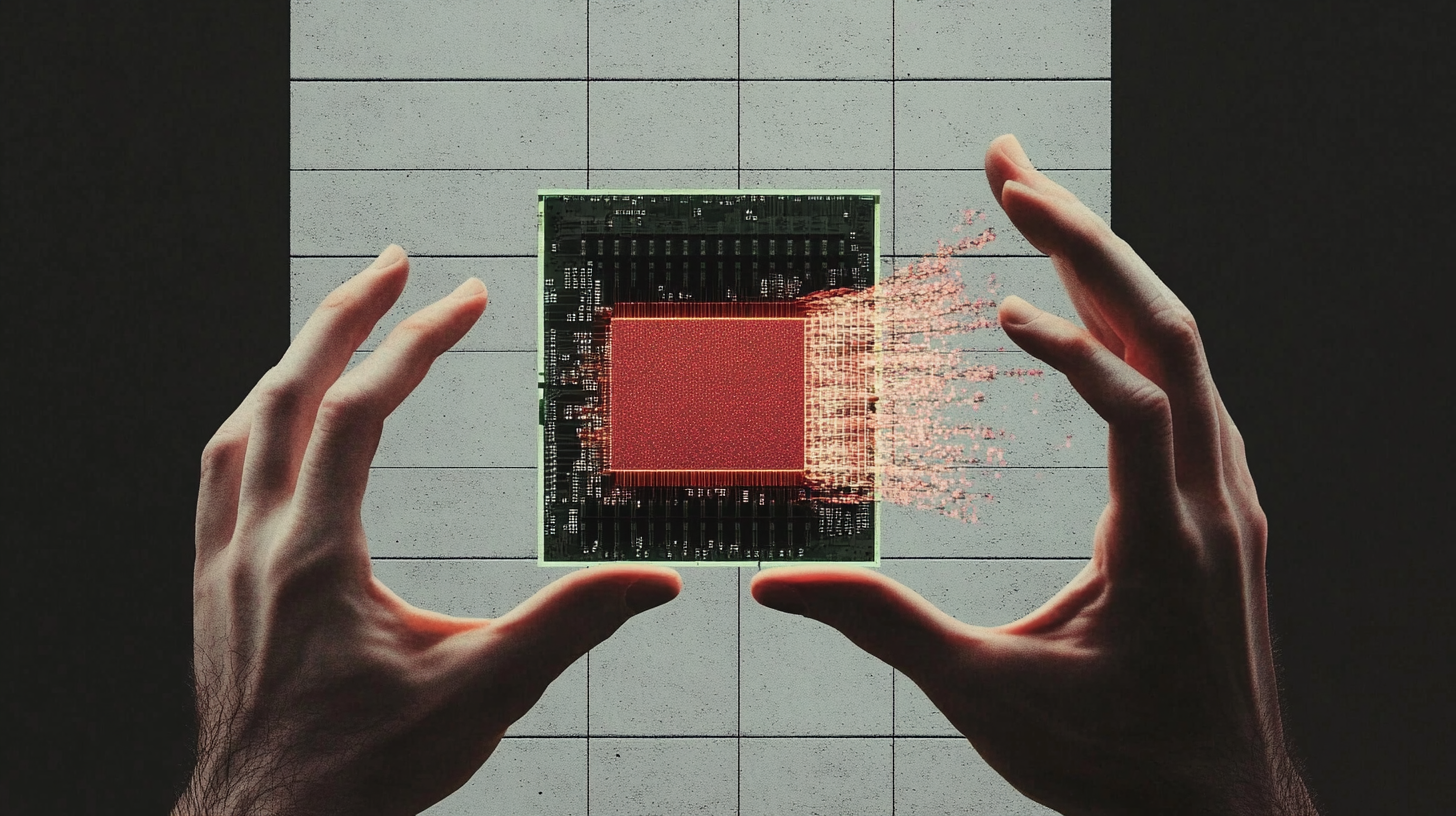

Hardware Acceleration

Deliver entirely new experiences unlocked by emerging AI ASICs.

From our team

How to run DeepSeek-R1 on an AMD MI300X

A step-by-step guide to running DeepSeek-R1-Distill on an AMD MI300X using the Shark-AI toolkit. The rise of open-source large language models (LLMs)…

How to run DeepSeek-R1 Reasoning on an Awesome AMD Node

DeepSeek-R1: The Self-Taught AI Redefining Reasoning, and How to Run It Yourself on an Awesome AMD MI300X. The era of AI…

Pioneering INT4 on AMD MI300X: Slashing Inference Costs by 25%

In the cutthroat world of AI infrastructure, the difference between profit and loss often hinges on microseconds and pennies. When…

AI Hardware lock-in is over.

Enjoy Optimized Optionality.

We offer free full-service model migration from any GPU cloud in under 7 days, with guaranteed performance or you pay nothing. Pretty awesome, right?